You’ve reached Day 21! Throughout this challenge, as you’ve explored different uses of AI in Testing, you’ve also uncovered many of its pitfalls. To successfully integrate AI into our testing activities, we must be conscious of these issues and develop a mindful approach to working with AI.

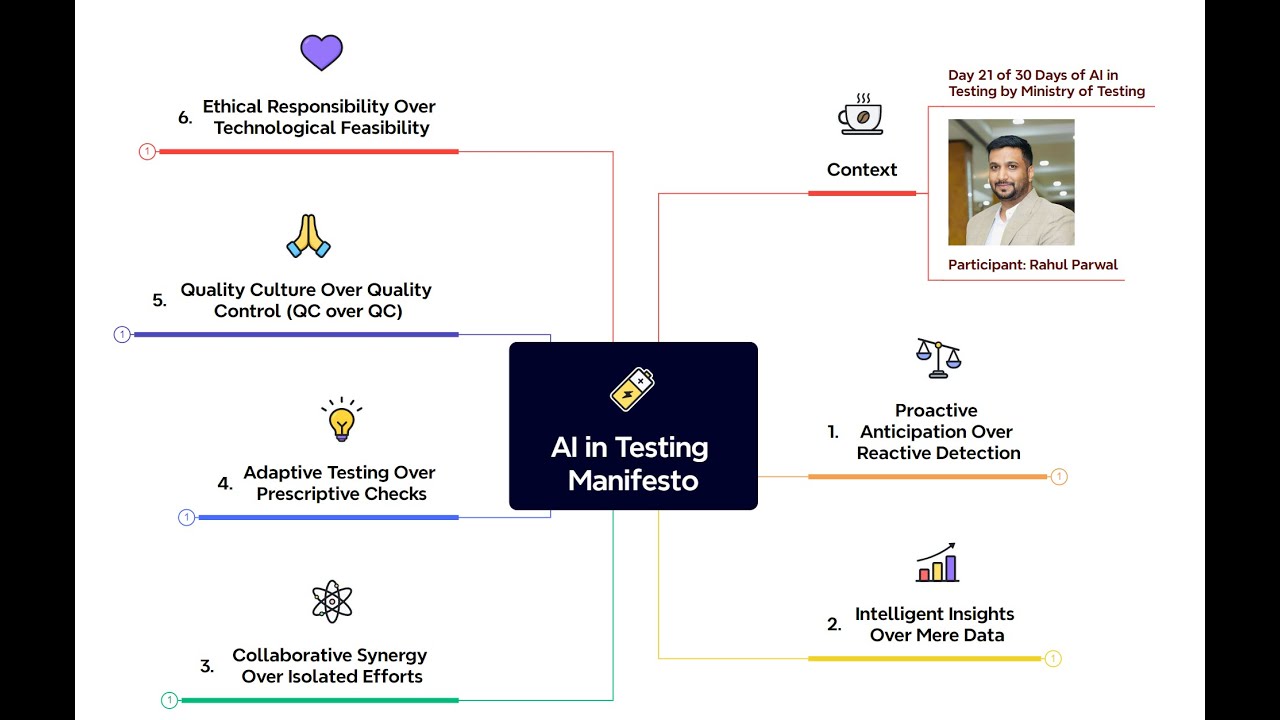

Today, you’re going to craft a set of principles to guide your approach to working with AI by creating your own AI in Testing Manifesto.

To help shape your manifesto, check out these well-known manifestos from the software development and testing world:

- Agile Manifesto - Beck et al.: This manifesto emphasises values such as prioritising individuals and interactions over processes and tools, working software over comprehensive documentation, customer collaboration over contract negotiation, and responding to change over following a plan.

- Testing Manifesto - Karen Greaves and Sam Laing: This manifesto emphasises continuous and integrated testing throughout development, prioritises preventing bugs, and values a deep understanding of user needs. It advocates for a proactive, user-focused approach to testing.

- Modern Testing Principles - Alan Page and Brent Jensen: These principles advocate for transforming testers into ambassadors of shippable quality, focusing on adding value, accelerating the team, continuous improvement, customer focus, data-driven decisions, and spreading testing skills across teams to enhance efficiency and product quality.

Task Steps

- Reflect on Key Learnings: Review the tasks you’ve completed and consider the opportunities, potential roadblocks, and good practices that emerged.

- Consider Your Mindset: What mindset shifts have you found necessary or beneficial when working with AI?

- Craft Your Personal Set of Principles: Start drafting your principles, aiming for conciseness and relevance to AI in testing. These principles should guide your decisions, practices, and attitudes towards using AI in your testing. To help, here are some areas and questions to consider:

  - Collaboration: How will AI complement your testing expertise?

  - Explainability: Why is understanding the reasoning behind AI outputs crucial?

  - Ethics: How will you actively address ethical considerations such as bias, privacy, and fairness?

  - Continuous Learning: How will you stay informed about, and keep learning from, advancements in AI?

  - Transparency: Why is transparency in AI testing tools and processes essential?

  - User-Centricity: How will you ensure that AI in testing ultimately enhances software quality and delivers a positive user experience?

- Share Your Manifesto: Reply to this post with your AI in Testing Manifesto. If you’re comfortable, share the rationale behind your principles and how they aim to shape your approach to AI in testing. Why not read other people’s manifestos and like or comment on the ones you find useful or interesting?

- Bonus Step: If you are free between 16:00 and 17:00 GMT today (21st March 2024), join the Test Exchange, our monthly skills and knowledge exchange session. This month there will be a special AI in Testing breakout room.

Why Take Part

- Refine Your Mindset: Developing your manifesto encourages deep reflection on the mindset needed to work successfully with AI.

- Shape Your Approach: Creating your manifesto helps solidify your perspective on AI in testing, ensuring you’re guided by a thoughtful framework.

- Inspire the Community: Sharing your manifesto offers valuable insights to others and contributes to the community’s collective understanding and application of AI in testing.